Robust Inference Through Randomization Test Theory And Applications

This article presents a practical path to robust inference by foregrounding the randomness built into an experimental design. By asking how the test statistic would behave had treatments been reassigned under the same randomization procedure, researchers can draw conclusions that remain reliable even when traditional model assumptions are questionable.

Understanding randomization tests helps teams design experiments with stronger inferential guarantees. The randomization mechanism itself defines the distribution of the test statistic under the null hypothesis, which enables nonparametric reasoning that adapts to a wide range of data types and structures.

Foundations of the Theory

At its core, the approach rests on the notion that the randomization process used to assign treatments or conditions creates a natural reference distribution. Under the null hypothesis that treatment has no effect on any unit, the observed outcomes would have been the same under every admissible assignment, so the test statistic can be recomputed for each one and the observed value compared against that set. The justification is exchangeability of the treatment labels under the null (permutation invariance), and the result is an exact p-value when all assignments are enumerated, or an accurate approximation when they are sampled, without heavy parametric assumptions.
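To make the reference distribution concrete, the sketch below enumerates every way of choosing the treated units in a small, completely randomized experiment and recomputes the statistic each time. The function name, the absolute difference in means as the statistic, and the toy data are illustrative assumptions rather than anything this article prescribes.

```python
from itertools import combinations


def exact_randomization_pvalue(outcomes, treated_idx):
    """Two-sided exact p-value for a difference in means.

    Assumes complete randomization: every subset of size len(treated_idx)
    was equally likely to be the treated group.
    """
    n = len(outcomes)
    k = len(treated_idx)
    total = sum(outcomes)

    def diff_in_means(idx):
        treated_sum = sum(outcomes[i] for i in idx)
        return treated_sum / k - (total - treated_sum) / (n - k)

    observed = abs(diff_in_means(treated_idx))
    extreme = 0
    n_assignments = 0
    for idx in combinations(range(n), k):
        n_assignments += 1
        # Small tolerance so floating-point ties count as "at least as extreme".
        if abs(diff_in_means(idx)) >= observed - 1e-12:
            extreme += 1
    return extreme / n_assignments


# Toy data (hypothetical): units 3, 4, 5 were treated.
outcomes = [1, 2, 3, 4, 5, 6]
p = exact_randomization_pvalue(outcomes, treated_idx=(3, 4, 5))
print(p)  # 2 of the 20 assignments are at least as extreme, so p = 0.1
```

With only 20 admissible assignments the smallest attainable two-sided p-value here is 0.1, which illustrates why very small experiments cannot reach conventional significance thresholds no matter how large the effect.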

Practical Inference Techniques

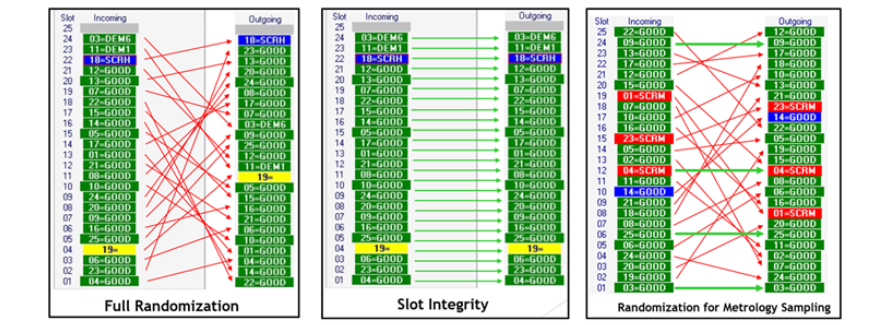

Key tools in this framework include permutation tests, randomization tests, and bootstrap-inspired resampling that respects the original randomization design. When the space of possible randomizations is tractable, exact calculations are feasible; otherwise, Monte Carlo sampling provides scalable estimates of the null distribution. These techniques support robust decision-making in settings like A/B testing, adaptive experimentation, and beyond.
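When full enumeration is out of reach, the null distribution can be approximated by drawing random reassignments. The following is a minimal sketch under assumed conditions: a two-arm, completely randomized design, an absolute difference in means as the statistic, and hypothetical names and defaults (`n_draws`, `seed`). The `+1` in numerator and denominator counts the observed assignment itself, a common convention that keeps the estimated p-value strictly positive.

```python
import random


def monte_carlo_randomization_pvalue(treated, control, n_draws=10_000, seed=0):
    """Approximate two-sided randomization p-value by sampling reassignments."""
    rng = random.Random(seed)
    pooled = list(treated) + list(control)
    k = len(treated)

    def abs_diff(values):
        # The first k positions play the role of the treated group.
        t, c = values[:k], values[k:]
        return abs(sum(t) / len(t) - sum(c) / len(c))

    observed = abs_diff(pooled)
    hits = 0
    for _ in range(n_draws):
        rng.shuffle(pooled)  # one random reassignment of units to arms
        if abs_diff(pooled) >= observed - 1e-12:
            hits += 1
    return (hits + 1) / (n_draws + 1)


# Hypothetical data: two clearly separated groups of eight units each.
p_sep = monte_carlo_randomization_pvalue(range(10, 18), range(1, 9))
print(p_sep)
```

Because the estimate is itself random, fixing the seed and reporting the number of draws alongside the p-value makes the analysis reproducible.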

Applications Across Fields

From online product experiments to clinical research, randomization-based inference offers a versatile toolkit. In technology, it guards against spurious signals caused by model misspecification or nonstandard error structures. In healthcare, permutation-based inference supports robust endpoints and complex outcomes. In education and the social sciences, randomization-based reasoning helps compare interventions under diverse conditions while preserving interpretability.

Key Points

- Framing inference around the randomization mechanism reduces dependence on distributional assumptions and boosts replicability.

- Permutation-based p-values are exact in finite samples when every admissible assignment is enumerated, and closely approximate when assignments are sampled.

- The framework accommodates complex designs such as blocked, stratified, and hierarchical experiments through conditional randomization schemes.

- Confidence intervals for an effect can be built on the same machinery, for example by inverting a randomization test over a range of hypothesized effect sizes.

- The approach is applicable across diverse domains, from digital experiments to clinical trials, illustrating broad practical utility.
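For blocked or stratified designs, the point about conditional randomization schemes can be sketched as follows: labels are reshuffled only within each block, so every simulated assignment is one the design could actually have produced. This is an illustrative sketch, not a reference implementation. The function name, the statistic (the mean of within-block differences in means), and the toy data are assumptions, and the code assumes every block contains at least one treated and one control unit.

```python
import random


def blocked_randomization_pvalue(outcomes, labels, blocks, n_draws=5_000, seed=0):
    """Approximate two-sided p-value, permuting labels within blocks only."""
    rng = random.Random(seed)

    # Group unit indices by block identifier.
    by_block = {}
    for i, b in enumerate(blocks):
        by_block.setdefault(b, []).append(i)

    def statistic(lab):
        # Mean over blocks of (treated mean - control mean), in absolute value.
        diffs = []
        for idx in by_block.values():
            t = [outcomes[i] for i in idx if lab[i] == 1]
            c = [outcomes[i] for i in idx if lab[i] == 0]
            diffs.append(sum(t) / len(t) - sum(c) / len(c))
        return abs(sum(diffs) / len(diffs))

    observed = statistic(labels)
    lab = list(labels)
    hits = 0
    for _ in range(n_draws):
        for idx in by_block.values():
            block_labels = [lab[i] for i in idx]
            rng.shuffle(block_labels)  # treated count per block is preserved
            for i, new_label in zip(idx, block_labels):
                lab[i] = new_label
        if statistic(lab) >= observed - 1e-12:
            hits += 1
    return (hits + 1) / (n_draws + 1)


# Hypothetical data: two blocks with very different baselines.
outcomes = [10, 11, 1, 2, 110, 111, 101, 102]
labels = [1, 1, 0, 0, 1, 1, 0, 0]
blocks = ["A", "A", "A", "A", "B", "B", "B", "B"]
p_blocked = blocked_randomization_pvalue(outcomes, labels, blocks)
print(p_blocked)  # should land near the exact value 2/36
```

Shuffling across blocks instead would mix the two baselines and test against assignments the design never allowed, which is the mismatch that conditional randomization avoids.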

Implementation Considerations

To apply these methods effectively, researchers should align the statistical test with the actual randomization plan. Predefine the test statistic, decide on exact versus approximate enumeration, and ensure adequate computational resources for resampling when needed. Integrating these steps into analysis pipelines enhances transparency and reproducibility.
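One way to make the exact-versus-approximate decision explicit before looking at outcomes is to count the admissible assignments implied by the design. The helper below is a hypothetical planning aid: the function names and the `max_exact` cutoff are assumptions chosen for illustration, and a sensible cutoff depends on available compute.

```python
from math import comb, prod


def count_assignments(block_sizes, treated_per_block):
    """Number of admissible assignments when a fixed number of units
    is treated within each block (one block = complete randomization)."""
    return prod(comb(n, k) for n, k in zip(block_sizes, treated_per_block))


def choose_method(block_sizes, treated_per_block, max_exact=1_000_000):
    """Return (method, n_assignments, smallest attainable exact p-value)."""
    n_assignments = count_assignments(block_sizes, treated_per_block)
    method = "exact" if n_assignments <= max_exact else "monte_carlo"
    return method, n_assignments, 1 / n_assignments


print(choose_method([6], [3]))    # small design: enumeration is feasible
print(choose_method([40], [20]))  # large design: sample assignments instead
```

Recording the chosen method and the assignment count in the analysis plan documents the decision alongside the predefined test statistic.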

What is the central idea behind Randomization Test Theory And Applications?

The central idea is to use the randomization process that generated the treatment assignments to derive inference without heavy model assumptions. By enumerating or sampling the randomization distribution of the test statistic under the null, we assess how extreme the observed statistic is, enabling nonparametric, design-aligned conclusions.

How does randomization testing compare to parametric tests?

Randomization tests are nonparametric and often offer exact or near-exact p-values in finite samples, making them robust to model misspecification. They rely on the actual randomization scheme rather than on assumed distributions, but they can be computationally intensive when the randomization space is large, and they require a well-defined randomization plan.

When should I consider using these methods over traditional inference?

These methods are particularly advantageous when the data violate common parametric assumptions, when sample sizes are modest, or when the experimental design provides a clear randomization mechanism. They are well-suited for A/B testing, nonnormal outcomes, and analyses where interpretability of the randomization process matters.

What are common limitations and how can I address them?

Limitations include computational cost for large randomization spaces and sensitivity to the chosen test statistic. Address these by designing efficient sampling schemes, using stratified or blocked randomization to reduce the randomization space, and validating the test statistic with simulation studies to ensure reasonable power and interpretability.