In modern deep learning, architectural choices determine throughput, energy consumption, and model accuracy. Dsa And Isotropic architectures are a family of design patterns that balance data movement against compute and keep processing uniform across layers. This article explains how to optimize Dsa And Isotropic systems for efficient deep learning, from algorithmic decisions to hardware-aware tuning. Use these principles to guide how you align data paths, tiling strategies, and precision choices with real-world constraints.

By focusing on the interplay between data flow and compute, teams can reduce bottlenecks, improve cache utilization, and achieve more predictable performance across diverse workloads. The following sections lay out practical steps to harness the benefits of Dsa And Isotropic architectures for both training and inference.

Key Points

- Align compute blocks with isotropic data paths to maximize reuse while minimizing memory traffic in Dsa And Isotropic designs.

- Use isotropy-aware tiling to unlock uniform pipeline occupancy across layers and avoid skewed workloads.

- Apply mixed-precision strategies to boost throughput while preserving accuracy in Dsa And Isotropic workloads.

- Optimize on-chip memory and interconnect topology to reduce off-chip data transfers for Dsa And Isotropic pipelines.

- Co-design algorithms and hardware to exploit regular, predictable workloads inherent to Dsa And Isotropic systems.

Principles for Efficient Dsa And Isotropic Deep Learning

What is Dsa And Isotropic Architecture?

Dsa And Isotropic architectures describe compute pipelines that balance data movement against compute and apply uniform processing across layers. By aligning data flow with the hardware's data paths, these designs aim to reduce latency and energy use without sacrificing model accuracy. Understanding these fundamentals helps teams choose appropriate tiling, memory hierarchy, and precision strategies for both training and deployment.

Data locality and memory bandwidth

Prioritize layouts and memory access patterns that keep data close to the compute units, especially activation maps and weight matrices in Dsa And Isotropic designs. Favor streaming data paths and avoid irregular access patterns that disrupt isotropic performance. When data locality is strong, the system can sustain higher throughput while spending less energy per operation.
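The following sketch makes the locality argument concrete with a simplified traffic model for a square matrix multiply C = A @ B. It rests on several hypothetical assumptions. The multiply is split into square tiles. Each tile-level step loads one tile of A and one tile of B from off-chip memory. Partial sums for C stay on chip. Caches, C write-back, and hardware limits on tile size are ignored. The function name `offchip_loads` is illustrative.

```python
def offchip_loads(n: int, tile: int) -> int:
    """Elements of A and B loaded from off-chip memory for an n x n matmul.

    Simplified, hypothetical model: the matmul is split into
    (n // tile) ** 3 tile-level multiplies, each of which loads one
    tile x tile block of A and one of B. Partial sums for C are assumed
    to stay on chip, so C traffic is not counted.
    """
    if tile <= 0 or n % tile != 0:
        raise ValueError("tile must be a positive divisor of n in this model")
    blocks = n // tile
    return blocks ** 3 * 2 * tile * tile  # equals 2 * n**3 / tile


for tile in (1, 8, 32, 128):
    print(f"tile={tile:>3}: {offchip_loads(256, tile):>10,} element loads")
```

In this model, traffic falls in proportion to the tile size (2 * n**3 / tile). That is why layouts that let a tile stay resident and be reused matter. In practice, on-chip memory capacity caps the usable tile size.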

Quantization and precision management

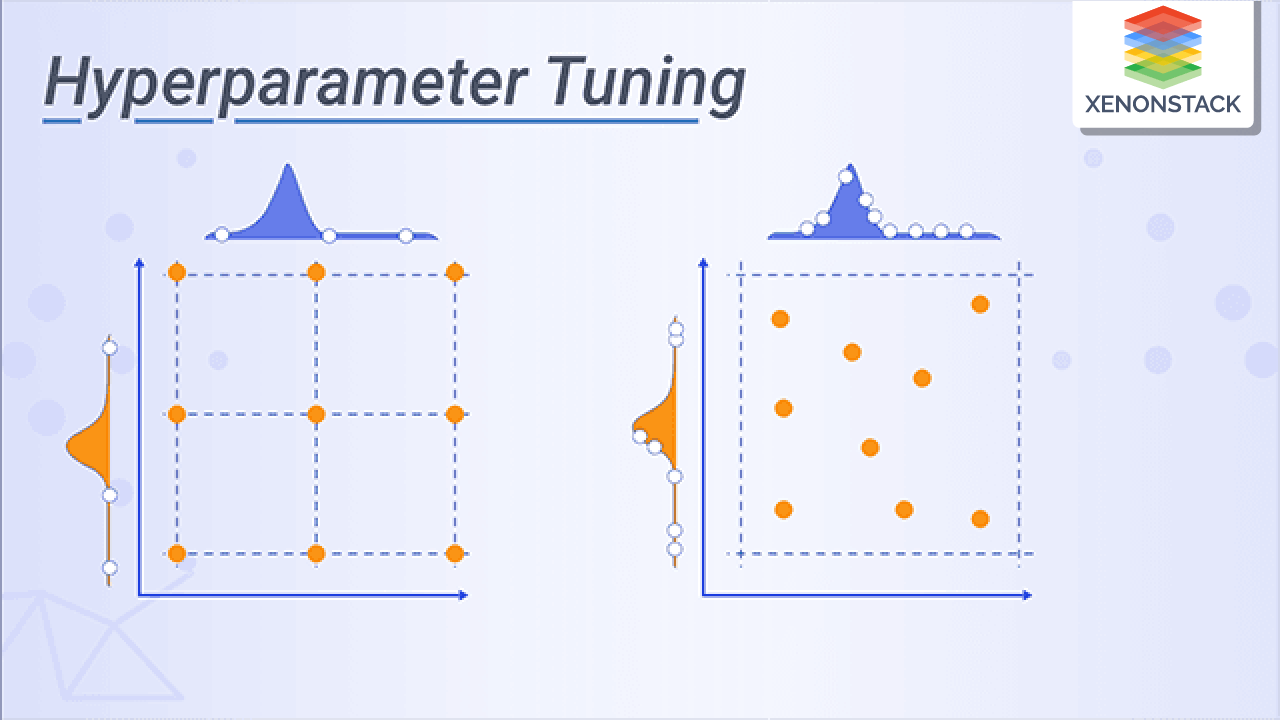

Adopt mixed-precision workflows, such as FP16 or BF16 compute with selective FP32 safeguards (for example, FP32 master weights and FP32 accumulation), to maintain numerical stability in Dsa And Isotropic workloads. Because isotropic layers share the same shapes, a consistent quantization scaling scheme can often be applied across them. This simplifies calibration and reduces the risk of layer-specific accuracy loss during training and inference.
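One reason FP32 copies are often kept alongside FP16 compute is that small weight updates can disappear when the weight itself is stored in FP16. The sketch below emulates storage precision with Python's `struct` module, where format `'e'` is IEEE 754 half precision and `'f'` is single precision. It applies the same small update repeatedly. The update size and step count are arbitrary illustrative values, and the helper names are hypothetical.

```python
import struct


def to_fp16(x: float) -> float:
    """Round a Python float to the nearest IEEE 754 half-precision value."""
    return struct.unpack("<e", struct.pack("<e", x))[0]


def to_fp32(x: float) -> float:
    """Round a Python float to the nearest IEEE 754 single-precision value."""
    return struct.unpack("<f", struct.pack("<f", x))[0]


def apply_updates(weight: float, update: float, steps: int, rounder) -> float:
    """Add the same small update `steps` times, rounding the stored weight
    to the target format after every addition."""
    for _ in range(steps):
        weight = rounder(weight + update)
    return weight


w16 = apply_updates(1.0, 1e-4, 1000, to_fp16)  # weight stored only in FP16
w32 = apply_updates(1.0, 1e-4, 1000, to_fp32)  # FP32 master copy of the weight
print(w16, w32)
```

With these values, the FP16 weight stays at 1.0. An update of 1e-4 is smaller than half the FP16 spacing near 1.0 (2⁻¹⁰ ≈ 0.00098), so every addition rounds back down. The FP32 copy accumulates to roughly 1.1.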

Hardware-aware tiling and scheduling

Design tiling strategies that map evenly onto SIMD lanes, cache banks, and interconnects. When tiling respects the isotropic nature of the workload, schedulers can achieve higher occupancy and lower stall rates, translating to tangible speedups on real devices.
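A quick way to check whether a tiling maps evenly onto SIMD lanes is to compute how much of the last lane group is padding. The sketch below assumes a hypothetical 32-lane vector unit and candidate tile sizes chosen for illustration. `lane_occupancy` and `pick_tile` are illustrative helpers, not part of any particular scheduler.

```python
from typing import Sequence


def ceil_div(a: int, b: int) -> int:
    """Integer ceiling division for positive integers."""
    return (a + b - 1) // b


def lane_occupancy(extent: int, lanes: int) -> float:
    """Fraction of lane slots doing useful work when `extent` elements are
    processed in groups of `lanes`, with the last group padded."""
    return extent / (ceil_div(extent, lanes) * lanes)


def pick_tile(extent: int, candidates: Sequence[int]) -> int:
    """Pick the candidate tile with the least padding; break ties by
    preferring the larger tile (fewer scheduling steps)."""
    return min(candidates, key=lambda t: (ceil_div(extent, t) * t - extent, -t))


LANES = 32  # hypothetical SIMD width
TILES = (32, 64, 96, 128)  # hypothetical lane-multiple tile candidates
for width in (96, 100, 384):
    print(width, f"{lane_occupancy(width, LANES):.3f}", pick_tile(width, TILES))
```

In a real kernel, occupancy also depends on register pressure, memory bank conflicts, and the interconnect. A padding-only score like this is therefore a first filter, not a final choice.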

Regularization of data paths

Standardize data pathways to reduce branching and variability. A predictable, isotropic data flow enables more stable performance across different inputs and batch sizes, which is valuable for deployment in edge and data-center environments alike.
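One common way to standardize data paths across inputs is shape bucketing. Each variable-length input is padded up to one of a few fixed lengths, so kernels only ever see a small, predictable set of shapes. The sketch below is a minimal illustration. The bucket sizes and the padding id `0` are arbitrary assumptions, and the helper names are hypothetical.

```python
import bisect
from typing import List, Sequence


def bucket_length(length: int, buckets: Sequence[int]) -> int:
    """Return the smallest bucket that fits `length`; buckets must be sorted."""
    idx = bisect.bisect_left(buckets, length)
    if idx == len(buckets):
        raise ValueError(f"length {length} exceeds the largest bucket {buckets[-1]}")
    return buckets[idx]


def pad_to_bucket(tokens: Sequence[int], buckets: Sequence[int], pad_id: int = 0) -> List[int]:
    """Right-pad `tokens` with `pad_id` up to its bucket length."""
    target = bucket_length(len(tokens), buckets)
    return list(tokens) + [pad_id] * (target - len(tokens))


BUCKETS = (64, 128, 256)  # hypothetical bucket sizes
lengths = [37, 100, 130, 250]
shapes = {len(pad_to_bucket([1] * n, BUCKETS)) for n in lengths}
print(sorted(shapes))  # [64, 128, 256]: four inputs collapse to three shapes
```

The trade-off is wasted compute on padding (here, a 130-token input is padded to 256). Bucket boundaries are therefore usually chosen from the observed length distribution.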

In summary, aligning algorithm design with Dsa And Isotropic principles and matching it to hardware realities can yield tangible gains in both throughput and energy efficiency. The strategies above provide a practical path to implementing these architectures in real-world projects.

<div class="faq-item">

<div class="faq-question">

<h3>How do Dsa And Isotropic architectures influence training efficiency?</h3>

<span class="faq-toggle">+</span>

</div>

<div class="faq-answer">

<p>Dsa And Isotropic architectures influence training efficiency by balancing compute and data movement. When data paths and compute blocks are aligned isotropically, hardware can better reuse cached data, reduce memory bandwidth bottlenecks, and maintain higher utilization across layers. This often translates into faster training iterations and more predictable energy usage, especially for large-scale models where data movement dominates cost.</p>

</div>

</div>
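Whether data movement dominates cost can be estimated with arithmetic intensity, the number of floating-point operations performed per byte moved to or from memory. The sketch below computes it for a matrix multiply. It makes the idealized assumption that each operand and the output cross the memory interface exactly once. Comparing the result against a device's ratio of peak FLOP/s to memory bandwidth (the roofline model) indicates whether a layer is likely compute-bound or bandwidth-bound.

```python
def matmul_intensity(m: int, n: int, k: int, bytes_per_elem: int) -> float:
    """FLOPs per byte for C[m, n] = A[m, k] @ B[k, n].

    Idealized: A, B, and C each cross the memory interface exactly once,
    which is the best case that perfect on-chip reuse could achieve.
    """
    flops = 2 * m * n * k  # one multiply and one add per multiply-accumulate
    bytes_moved = (m * k + k * n + m * n) * bytes_per_elem
    return flops / bytes_moved


# Large square matmul in 16-bit precision vs a batch-1 matrix-vector product.
print(f"{matmul_intensity(1024, 1024, 1024, 2):.1f} FLOP/byte")
print(f"{matmul_intensity(1, 1024, 1024, 2):.2f} FLOP/byte")
```

The square case performs hundreds of operations per byte, while the matrix-vector case performs about one. The latter is therefore far more likely to be limited by memory bandwidth.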

<div class="faq-item">

<div class="faq-question">

<h3>What strategies optimize data movement in Dsa And Isotropic designs?</h3>

<span class="faq-toggle">+</span>

</div>

<div class="faq-answer">

<p>Strategies include choosing memory layouts that maximize spatial locality, applying fused operations to reduce intermediate buffers, and using tiling that respects isotropy to avoid unbalanced stalls. Prefetching aligned with the isotropic compute pattern and minimizing irregular access patterns also help keep data flowing smoothly through the pipeline.</p>

</div>

</div>
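Operator fusion can be illustrated with a bias-add followed by ReLU. The unfused version materializes the bias-add result as a full temporary buffer and then reads it back. The fused version does both steps per element in a single pass. This pure-Python sketch only shows the structure of the two approaches, since Python lists do not model caches or registers. In a compiled kernel, the fused form avoids writing and re-reading the intermediate tensor.

```python
from typing import List, Sequence


def bias_relu_unfused(x: Sequence[float], bias: Sequence[float]) -> List[float]:
    """Two passes over memory with an intermediate buffer in between."""
    tmp = [xi + bi for xi, bi in zip(x, bias)]  # pass 1: write intermediate
    return [max(t, 0.0) for t in tmp]           # pass 2: read it back


def bias_relu_fused(x: Sequence[float], bias: Sequence[float]) -> List[float]:
    """One pass: bias-add and ReLU are applied per element, no intermediate."""
    return [max(xi + bi, 0.0) for xi, bi in zip(x, bias)]


x = [-1.0, 0.5, 2.0]
bias = [0.5, -1.0, 1.0]
print(bias_relu_fused(x, bias))  # [0.0, 0.0, 3.0]
```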

<div class="faq-item">

<div class="faq-question">

<h3>How can one balance isotropy with performance on real hardware?</h3>

<span class="faq-toggle">+</span>

</div>

<div class="faq-answer">

<p>Balance is achieved by matching kernel shapes, tiling, and memory layouts to the hardware's burst sizes and cache structure while preserving a uniform compute profile. Selecting appropriate batch sizes and employing hardware-aware quantization can maintain isotropic behavior without sacrificing throughput. Real-world testing across representative workloads is essential to validate the balance.</p>

</div>

</div>
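Matching layouts to burst sizes often comes down to rounding sizes up to a hardware-friendly multiple. The sketch below pads a row to a whole number of memory bursts and rounds a batch size up to a multiple of a hypothetical preferred granularity. The 64-byte burst and the multiple of 8 are assumptions for illustration; substitute the values documented for your hardware.

```python
def round_up(value: int, multiple: int) -> int:
    """Round a positive integer up to the next multiple of `multiple`."""
    return -(-value // multiple) * multiple


def padded_row_bytes(cols: int, elem_bytes: int, burst_bytes: int = 64) -> int:
    """Bytes per row after padding the row to whole memory bursts
    (64-byte burst is an assumed default)."""
    return round_up(cols * elem_bytes, burst_bytes)


row = padded_row_bytes(100, 2)  # 100 FP16 values = 200 bytes of payload
print(row, f"{200 / row:.0%} of each padded row is payload")
print(round_up(30, 8))  # batch of 30 rounded up to a multiple of 8
```

Padding trades some memory for aligned, full-width transfers. Whether the trade pays off is worth confirming by measurement on representative workloads.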

<div class="faq-item">

<div class="faq-question">

<h3>Can quantization be beneficial for Dsa And Isotropic architectures?</h3>

<span class="faq-toggle">+</span>

</div>

<div class="faq-answer">

<p>Yes. Quantization reduces memory bandwidth and lowers compute costs, which complements the data-path efficiency of Dsa And Isotropic designs. When done with careful calibration and, if needed, per-layer or per-block scaling, quantization can preserve accuracy while delivering meaningful speedups in isotropic pipelines.</p>

</div>

</div>
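The value of per-block scaling is easiest to see with a tensor whose blocks have very different magnitudes. The sketch below applies symmetric int8 fake quantization (quantize to integers, then scale back). It uses either one scale for the whole tensor or one scale per block, and reports the worst relative error. The weights, block size, and helper names are illustrative assumptions, not a calibrated recipe.

```python
from typing import List, Optional, Sequence


def fake_quantize(values: Sequence[float], block: Optional[int] = None, bits: int = 8) -> List[float]:
    """Symmetric linear quantization to signed integers and back.

    Uses one scale per `block` consecutive values, or a single scale for
    the whole sequence when `block` is None.
    """
    qmax = 2 ** (bits - 1) - 1  # 127 for 8 bits
    block = block or len(values)
    out: List[float] = []
    for start in range(0, len(values), block):
        chunk = values[start:start + block]
        max_abs = max(abs(v) for v in chunk) or 1.0  # avoid a zero scale
        scale = max_abs / qmax
        for v in chunk:
            q = max(-qmax, min(qmax, round(v / scale)))  # clamp to int range
            out.append(q * scale)
    return out


def max_rel_error(values: Sequence[float], approx: Sequence[float]) -> float:
    """Worst-case |v - approx| / |v| over the nonzero values."""
    return max(abs(v - a) / abs(v) for v, a in zip(values, approx) if v != 0)


# One block of small weights followed by one block of large weights.
weights = [0.001, -0.002, 0.003, -0.004, 5.0, -6.0, 7.0, -8.0]
per_tensor = max_rel_error(weights, fake_quantize(weights))
per_block = max_rel_error(weights, fake_quantize(weights, block=4))
print(f"per-tensor: {per_tensor:.2%}, per-block: {per_block:.2%}")
```

With a single scale set by the largest magnitude (8.0), every value in the small block rounds to zero, giving a relative error of 100%. With one scale per block of four, the worst relative error drops below 1%.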